WARNING! The fix implemented below will leave your data warehouse database in an unsupported state. This may lead to data corruption, and you may have to restore from a backup. Microsoft will not support such direct edits of the database, and I cannot be held responsible for any consequences. I recommend you open a case with Microsoft if you are experiencing these problems.

Following an internal upgrade from DPM 2012 R2 to 2016, I replaced the appropriate DPM management packs in SCOM, upgrading them to version 5.0.1300.0 (released on 26/10/2016), and monitoring works perfectly. However, there were persistent errors logged on the management servers, relating to the data warehouse:

Event ID: 31565

Failed to deploy Data Warehouse component. The operation will be retried.

Exception ‘DeploymentException’: Failed to perform Data Warehouse component deployment operation: Install; Component: DataSet, Id: ’34f57e9b-2777-8c69-a5a6-fbc8d0f1824f’, Management Pack Version-dependent Id: ‘e3a96ce5-bfb2-07b8-ef66-4c711a6606c7’; Target: DataSet, Id: ‘579fd046-1d03-4e54-9c4e-b3534a06a704’. Batch ordinal: 8; Exception: Violation of UNIQUE KEY constraint ‘UN_StandardDatasetAggregationStorage_BaseTableName’. Cannot insert duplicate key in object ‘dbo.StandardDatasetAggregationStorage’. The duplicate key value is (tbl_DPM_BackupEvents).

The statement has been terminated.

One or more workflows were affected by this.

Workflow name: Microsoft.SystemCenter.DataWarehouse.Deployment.Component

Instance name: Data Warehouse Synchronization Service

and

Event ID: 31565

Failed to deploy Data Warehouse component. The operation will be retried.

Exception ‘DeploymentException’: Failed to perform Data Warehouse component deployment operation: Install; Component: DataSet, Id: ‘6d9addfa-7b4c-4536-9fcc-ad6af21f835c’, Management Pack Version-dependent Id: ‘9e315942-a99b-8bb9-56d9-712f601901e7’; Target: DataSet, Id: ‘e13dde51-363c-47b2-8133-f892de58d3d0’. Batch ordinal: 8; Exception: Failed to rename staging table as part of dataset maintenance. sp_rename returned ‘2627’

Cannot grant, deny, or revoke permissions to sa, dbo, entity owner, information_schema, sys, or yourself.

Cannot grant, deny, or revoke permissions to sa, dbo, entity owner, information_schema, sys, or yourself.

One or more workflows were affected by this.

Workflow name: Microsoft.SystemCenter.DataWarehouse.Deployment.Component

Instance name: Data Warehouse Synchronization Service

Initially, as monitoring was working, I was content with leaving these data warehouse problems alone: we rarely access the data warehouse and have never done so for DPM, and I assume that Microsoft are working on a fix. However, we recently ran into some other data warehouse problems, so along the way I wanted to clean these up.

The problem was caused by old datasets being left in the data warehouse database by the DPM 2012 R2 management pack. This wouldn’t usually be a problem, but the same table names are used by the DPM 2016 MP, and this causes conflicts that prevented the new MP from being able to deploy itself. The solution was to remove the old DPM 2012 R2 datasets, which I no-longer needed, using a script on Mihai Sarbulescu’s blog. Although the script is described as being for SCOM 2007 R2, I ran it against SCOM 2016 without any difficulties (although I had to fix a small typo :p).

Evidence of the problem is the events mentioned above and output from the below query where there are zeroes in the InstallCompletedInd column for DPM datasets. This indicates failed deployments for DPM datasets to the database.

USE OperationsManagerDW

SELECT * FROM dbo.Dataset ORDER BY InstalledDateTime DESC

WARNING! Again, the below fix is unsupported by Microsoft, and I can’t be held responsible for this or any other consequences. You will also lose your old DPM data from the data warehouse database by executing the below.

The steps to my solve my problem were:

- Stop the healthservice, cshost and omsdk services on all management servers.

- Take a backup of the data warehouse database.

- Execute the script to remove the old DPM datasets (see below).

- Start the healthservice, cshost and omsdk services on all management servers.

- Observe that, over the next few minutes, the DPM 2016 data warehouse components are automatically deployed, by examining the

dbo.Dataset table (see above).

The script to remove the old DPM datasets is below. Full credit goes to Mihai Sarbulescu. Just remember to update the @MGName variable.

USE OperationsManagerDW

DECLARE

@DataSetLike NVARCHAR(255),

@MGName NVARCHAR(255),

@DataSetId UNIQUEIDENTIFIER,

@MGid INT,

@FetchStatus INT,

@AgId INT

SET @MGName = N'Your management group name'

SET @DataSetLike = N'DPM'

DECLARE Cur CURSOR LOCAL FOR

SELECT DataSetID

FROM DataSet

WHERE DatasetDefaultName LIKE (@DataSetLike + '%')

OPEN Cur

FETCH NEXT FROM Cur INTO @DataSetId

SET @FetchStatus = @@FETCH_STATUS

SET @MGid = (

SELECT ManagementGroupRowId

FROM ManagementGroup

WHERE ManagementGroupDefaultName = @MGName

)

WHILE (@FetchStatus = 0) BEGIN

SELECT @AgId = min(StandardDatasetAggregationStorageRowId)

FROM StandardDatasetAggregationStorage

WHERE DatasetId = @DataSetId

PRINT N'Agid = ' + Cast(@Agid AS VARCHAR)

WHILE (@AgId IS NOT NULL) BEGIN

DELETE

FROM StandardDatasetAggregationStorageIndex

WHERE StandardDatasetAggregationStorageRowId = @AgId

PRINT N'Deleting from StandardDataSetAggregationStorageIndex: ' + Cast(@DataSetId AS NVARCHAR(50))

DELETE

FROM StandardDatasetAggregationStorage

WHERE StandardDatasetAggregationStorageRowId = @AgId

PRINT N'Deleting from StandardDataSetAggregationStorage: ' + Cast(@DataSetId AS NVARCHAR(50))

SELECT @AgId = min(StandardDatasetAggregationStorageRowId)

FROM StandardDatasetAggregationStorage WHERE DatasetId = @DataSetId

PRINT N'Agid = ' + Cast(@Agid AS VARCHAR)

END

DELETE

FROM ManagementGroupDataset

WHERE

DatasetId = @DataSetId AND

ManagementGroupRowId = @MGid

PRINT N'Deleting from ManagementGroupDataset: ' + Cast(@DataSetId AS NVARCHAR(50))

DELETE

FROM StandardDataset

WHERE DatasetId = @DataSetId

PRINT N'Deleting from StandardDataSet: ' + Cast(@DataSetId AS NVARCHAR(50))

DELETE

FROM StandardDatasetAggregationStorage

WHERE DatasetId = @DataSetId

PRINT N'Deleting from StandardDataSetAggregationStorage: ' + Cast(@DataSetId AS NVARCHAR(50))

DELETE

FROM StandardDatasetAggregationHistory

WHERE DatasetId = @DataSetId

PRINT N'Deleting from StandardDataSetAggregationHistory: ' + Cast(@DataSetId AS NVARCHAR(50))

DELETE

FROM StandardDatasetAggregation

WHERE DatasetId = @DataSetId

PRINT N'Deleting from StandardDataSetAggregation: ' + Cast(@DataSetId AS NVARCHAR(50))

DELETE

FROM StandardDatasetTableMap

WHERE DatasetId = @DataSetId

PRINT N'Deleting from StandardDataSetTableMap: ' + Cast(@DataSetId AS NVARCHAR(50))

DELETE

FROM Dataset

WHERE DatasetId = @DataSetId

PRINT N'Deleting from DataSet: ' + Cast(@DataSetId AS NVARCHAR(50))

FETCH NEXT FROM Cur INTO @DataSetId

SET @FetchStatus = @@FETCH_STATUS

END

CLOSE Cur

DEALLOCATE Cur

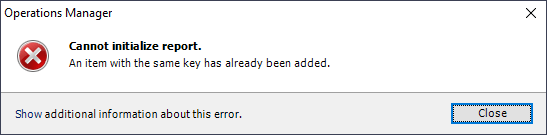

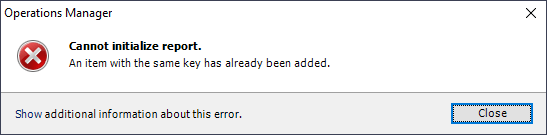

If you’ve implemented the above, your data warehouse database should populate with DPM data after a day or two; however, you probably won’t be able to view the report as it’ll show this error:

An item with the same key has already been added.

This occurs because the report selects the display name of DPM servers, and Microsoft have reused the class name of objects discovered by both the DPM 2012 R2 and 2016 management packs, so there’s essentially duplicate data in the data warehouse, and for some server names two entries are returned to the report. The fix is to either delete the redundant data, which gets a bit convoluted because of the foreign key references, or to simply rename the old objects. Again, the usual disclaimer at the beginning of this article applies: if you do this, your database will be unsupported.

DECLARE @DisplayNameSuffix NVARCHAR(MAX) = '(DPM 2012 R2)'

UPDATE

me

SET

me.DisplayName = me.DisplayName + ' ' + @DisplayNameSuffix

FROM

dbo.ManagedEntity me

INNER JOIN dbo.vManagedEntityManagementGroup memg

ON me.ManagedEntityRowId = memg.ManagedEntityRowId

INNER JOIN dbo.vManagedEntityType met

ON me.ManagedEntityTypeRowId = met.ManagedEntityTypeRowId

INNER JOIN dbo.vManagementPack mp

ON met.ManagementPackRowId = mp.ManagementPackRowId

WHERE

memg.ToDateTime IS NOT NULL

AND met.ManagedEntityTypeSystemName = 'Microsoft.SystemCenter.DataProtectionManager.2011.Library.DPMServer'

AND mp.ManagementPackSystemName = 'Microsoft.SystemCenter.DataProtectionManager.2012.Library'

AND me.DisplayName NOT LIKE '%' + @DisplayNameSuffix + '%'